Kunlun Open-Sources 200-Billion-Parameter Sparse Large Model Tiangong MoE, the World's First to Support Inference on the RTX 4090...

DIYuan | 2024-06-04 17:14

[DIYuan Summary] Kunlun open-sources the 200-billion-parameter sparse large model Tiangong MoE, the world's first to support inference on the RTX 4090

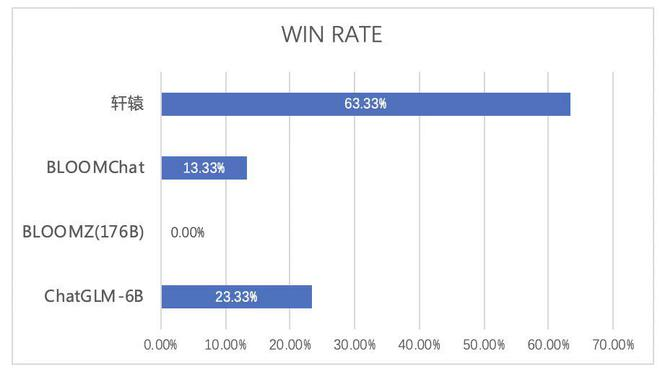

On June 3, according to Kunlun's official social media account, Kunlun announced that it has open-sourced Skywork-MoE, a 200-billion-parameter sparse large model that delivers strong performance at a lower inference cost. Skywork-MoE was extended from an intermediate checkpoint of Kunlun's open-source Skywork-13B model. It is the first open-source hundred-billion-parameter-scale MoE model to fully apply and put into practice MoE Upcycling technology, and also the first open-source hundred-billion-parameter-scale MoE model to support inference on a single RTX 4090 server.

Source: DIYuan

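Statement: DIYuan respects media industry norms, and all related content is credited with its source and author. When reprinting our original content, please be sure to credit "Source: DIYuan" and the author's name; otherwise, DIYuan will hold the reprinting party accountable.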